Connecting to Google BigQuery

Configure Google BigQuery in Tellius with service accounts, permissions, and support for tables, views, external data, and SQL materialization.

Loading from table

Loading from external tables

Loading from view

Loading from SQL query

Prerequisites for configuring BigQuery in Google Cloud

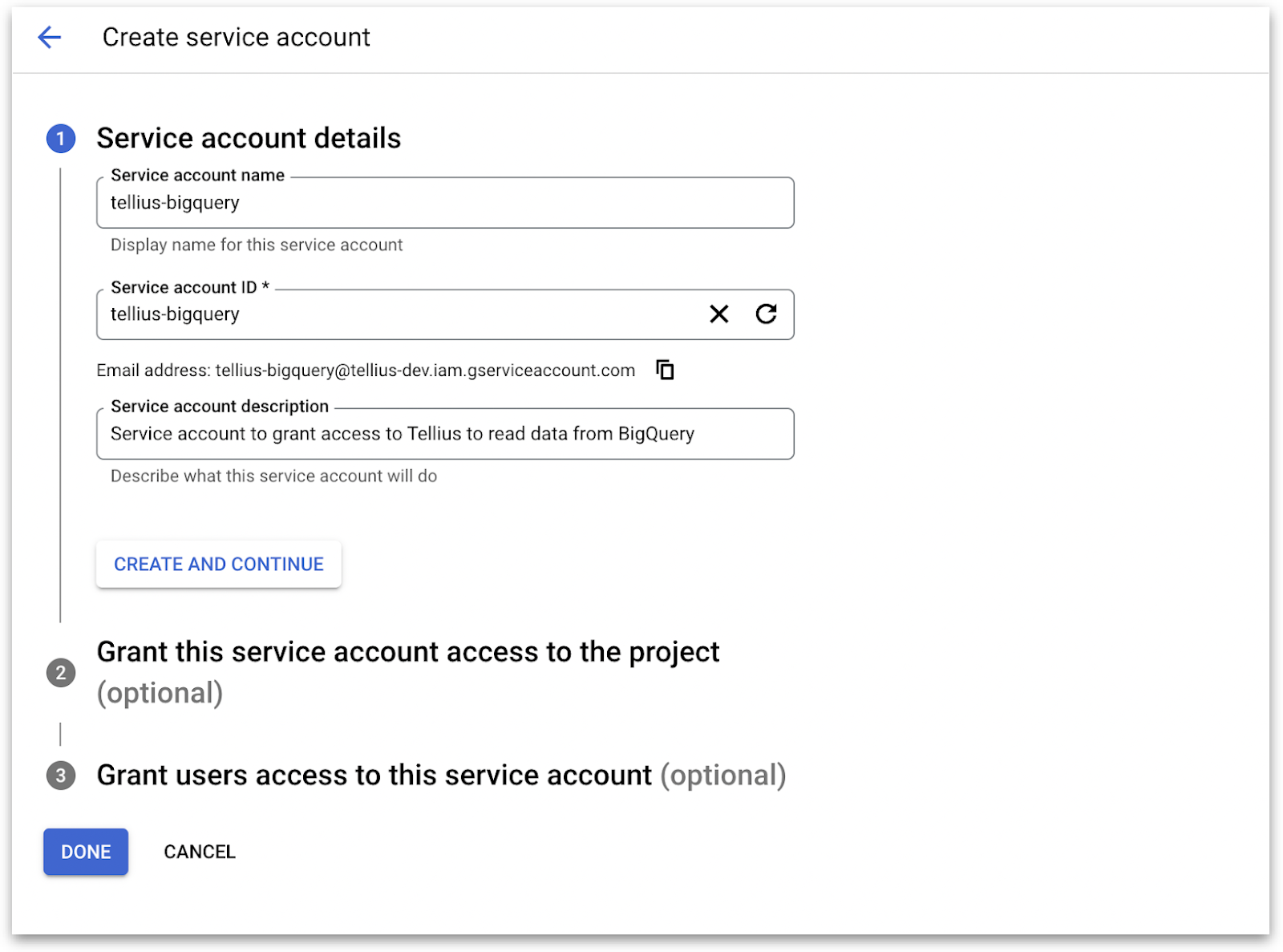

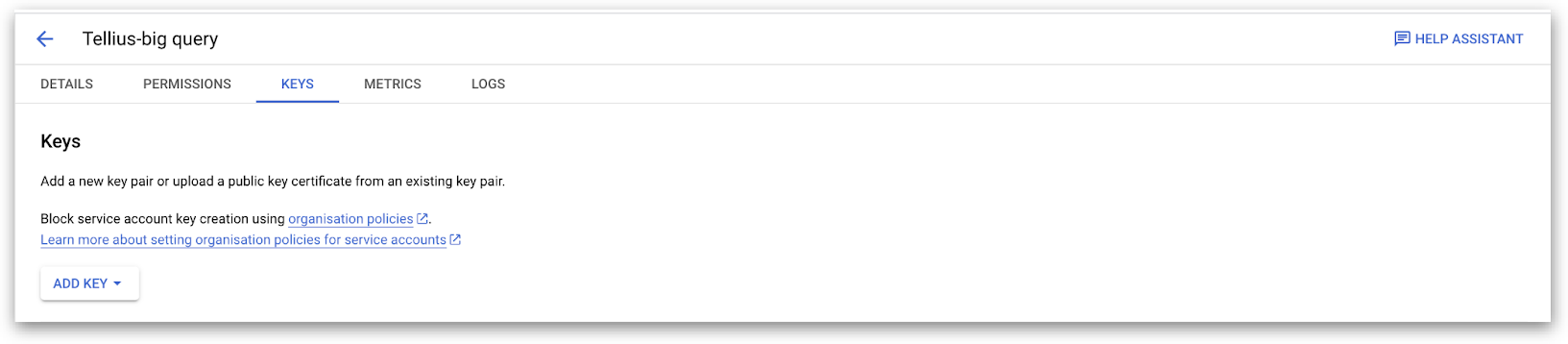

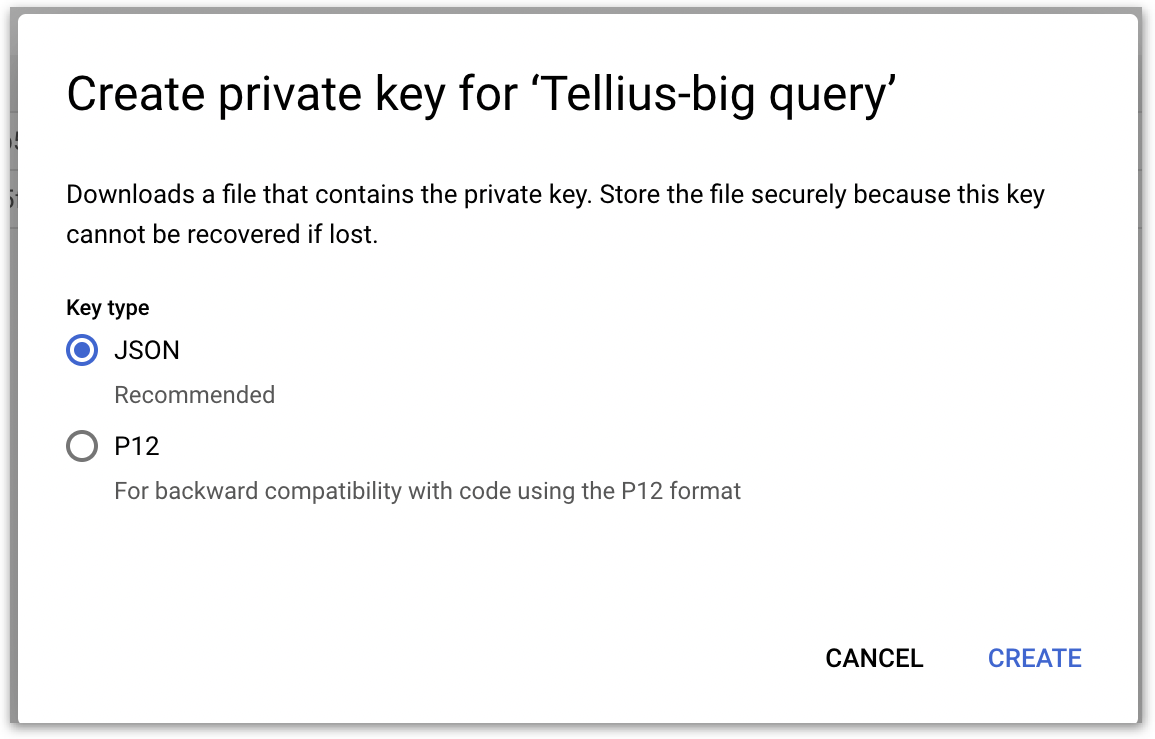

Service Account Creation

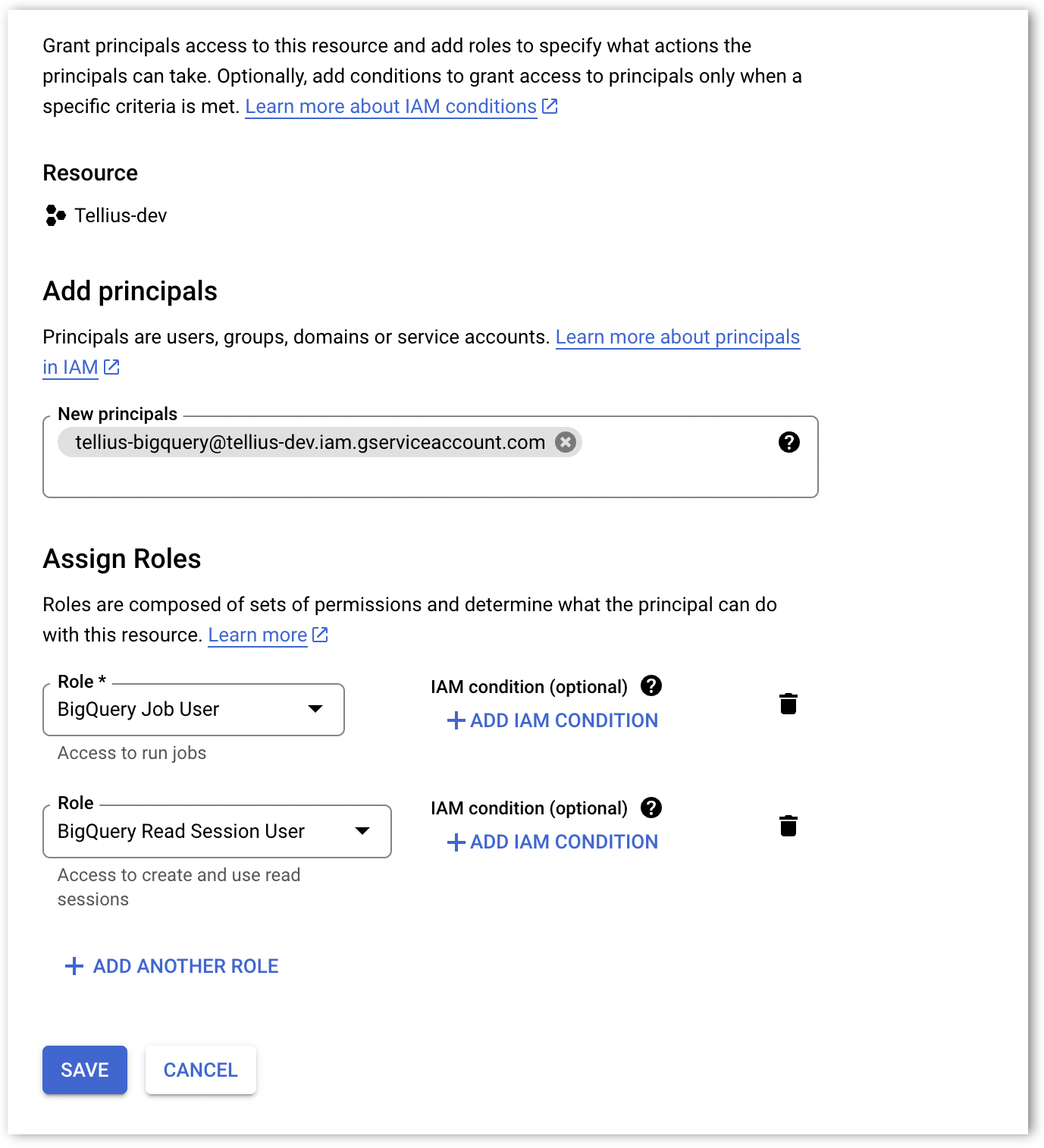
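Before supplying a service account key to a connector, it can help to confirm that the downloaded JSON file is well formed. The following is a minimal sketch, not part of Tellius: the function name `check_service_account_key` and the sample values are hypothetical, and the field list reflects the standard layout of a Google Cloud service account key file rather than anything Tellius specifically requires.

```python
import json

# Fields present in a standard Google Cloud service account key file (JSON).
# Assumption: this subset is enough for a quick sanity check.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email", "token_uri")


def check_service_account_key(raw_json: str) -> list[str]:
    """Return a list of problems found in the key; an empty list means none were found."""
    try:
        key = json.loads(raw_json)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(key, dict):
        return ["top-level JSON value must be an object"]

    # Report every required field that is absent or empty.
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if not key.get(name)]
    # A user credential or other key type will not work as a service account.
    if key.get("type") and key["type"] != "service_account":
        problems.append(f"unexpected type: {key['type']!r}")
    return problems


if __name__ == "__main__":
    # Placeholder values only; a real key is downloaded from the Google Cloud console.
    sample = json.dumps({
        "type": "service_account",
        "project_id": "my-project",
        "private_key": "-----BEGIN PRIVATE KEY-----\n...\n-----END PRIVATE KEY-----\n",
        "client_email": "tellius-reader@my-project.iam.gserviceaccount.com",
        "token_uri": "https://oauth2.googleapis.com/token",
    })
    print(check_service_account_key(sample))
```

This check only inspects the file's structure. It does not contact Google Cloud, so it cannot confirm that the account exists or that the project-level and dataset-level permissions have been granted.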

Project Level Permissions

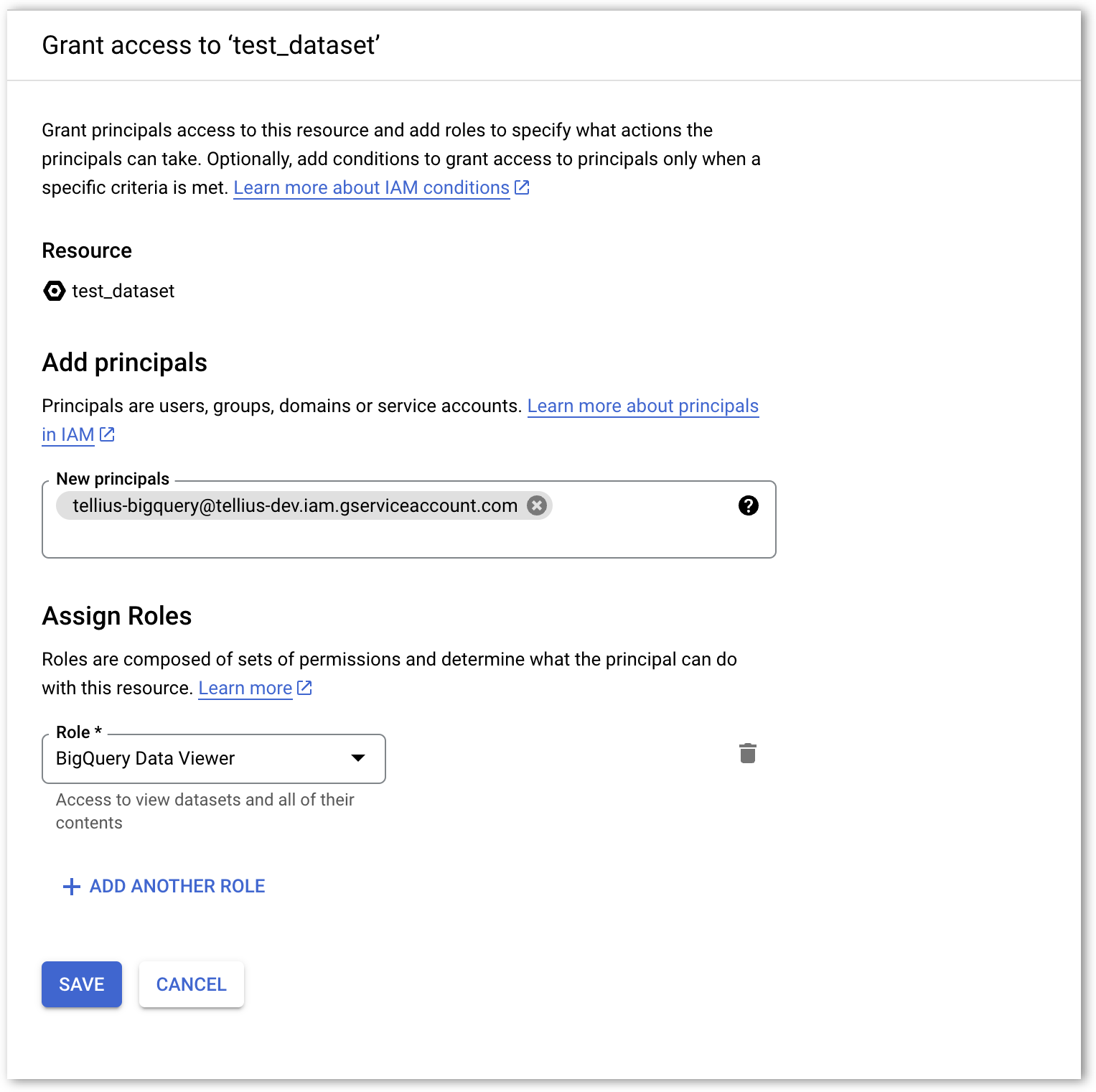

Dataset Level Permissions
