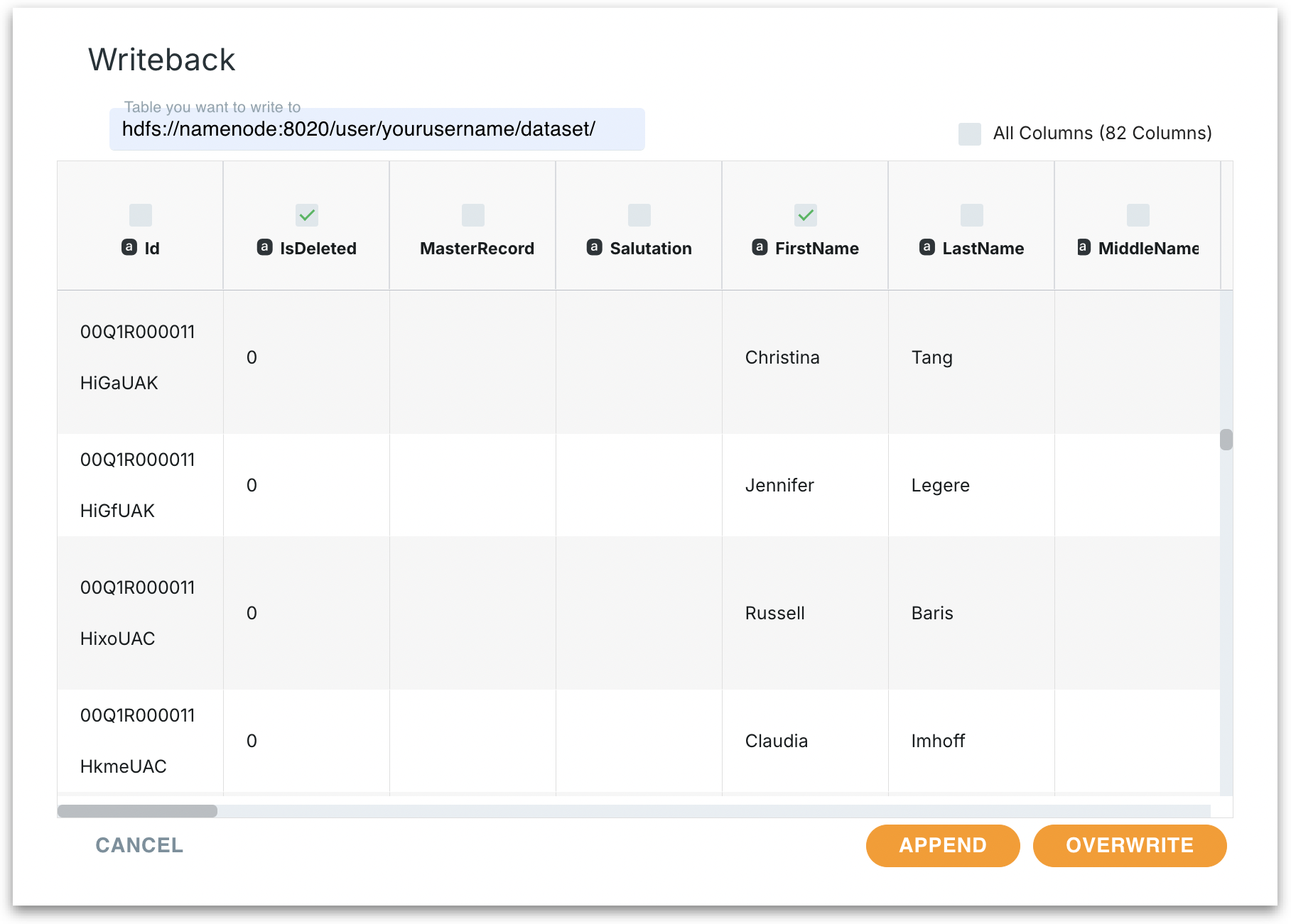

✍️Writeback window

Customize how you export datasets with flexible column selection, preview options, and overwrite or append modes to publish refined data back to your chosen system.

The “Writeback” window is where you finalize your dataset export. You pick which columns to include, preview the data as a sanity check, choose whether to append to or overwrite existing content, and confirm the target path. This lets you control exactly how your dataset is published back through the selected connector.

Writeback eliminates the need for switching between applications to make data updates. It allows you to edit directly within the platform. Team members can add or update records directly, fostering effective team collaboration.

Clicking on the Export icon under Data → Prepare → Data shows the following list of possible export targets.

CSV (Local export): Generates a CSV file that you can download or store locally.

HDFS (Write-back to Hadoop): Writes the dataset back into your Hadoop Distributed File System. This can be a path like hdfs://namenode:8020/user/username/export_path/, where you ensure Tellius has the right HDFS credentials/permissions to write to that location.

Snowflake/Redshift/MySQL/Oracle/other connectors (Write back): Indicates various Snowflake or similar warehouse connectors. They let you push data to cloud or on-prem databases, enabling deeper integration with your enterprise data environment. By selecting the appropriate connector, you effectively choose where and how to store your curated dataset once you’re done preparing it in Tellius.

Path/Table you want to write to: Allows you to specify exactly where the new exported file or table should reside in the target system. For example, with HDFS, you provide the HDFS URI (hdfs://namenode:8020/...) and subdirectory path. For other connectors, this would be a table name.

All columns: Every column in the dataset will be exported. You can also selectively include or exclude columns for the export.

Append: Adds these rows at the end of an existing file or table (if the connector supports it). Useful if you’re incrementally adding new records to a historical dataset.

Overwrite: Completely replaces any existing file or table at the path with this new dataset. Overwriting is handy if you want to refresh the entire dataset from scratch in the target system.

Once the export completes, the target table is updated and a message confirms that the dataset has been refreshed.
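The path and column choices described above can be illustrated with a small standalone sketch. This is not Tellius code or a Tellius API: `describe_hdfs_target` and `select_columns` are hypothetical helpers, written with the Python standard library only, that show how an HDFS URI breaks down into host, port, and directory, and what "All columns" versus a selective include/exclude amounts to. The sample row is made up for illustration.

```python
from urllib.parse import urlsplit


def describe_hdfs_target(uri):
    """Split an HDFS URI into host, port, and path (hypothetical helper)."""
    parts = urlsplit(uri)
    if parts.scheme != "hdfs":
        raise ValueError(f"expected an hdfs:// URI, got {uri!r}")
    return {"host": parts.hostname, "port": parts.port, "path": parts.path}


def select_columns(rows, include=None, exclude=()):
    """Keep only the chosen columns; include=None behaves like 'All columns'."""
    selected = []
    for row in rows:
        names = list(row) if include is None else [c for c in include if c in row]
        selected.append({c: row[c] for c in names if c not in exclude})
    return selected


# The URI is the example path from the text above.
target = describe_hdfs_target("hdfs://namenode:8020/user/username/export_path/")

# Illustrative data: export every column except "email".
rows = [{"id": 1, "name": "Ada", "email": "ada@example.com"}]
subset = select_columns(rows, exclude=("email",))
```

Checking the URI pieces before exporting makes it easier to spot a wrong namenode host, port, or directory, which are common causes of a failed write.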

These connectors mean that Tellius can function not just as an ingestion/analysis platform but also as a data integration hub. You can take the curated or transformed dataset and place it back into a system where:

Other tools can read or analyze it.

It can be versioned or integrated with an existing data lake or warehouse.

You can set up downstream processes, such as additional transformations, ML pipelines, or data sharing.

Best practices

CSV is ideal for local, smaller exports or quick data sharing. HDFS, Snowflake, and other connectors are typically better for high-volume scenarios.

Write-back requires that the user or system has correct credentials, roles, or grants (e.g., permission to create or replace tables, or to write to an HDFS path).

Depending on the connector, you may choose to overwrite an existing table/path or append rows. For warehouse exports, check if there’s an existing table with the same name that might be overwritten.

Large datasets can take time to write. Some connectors have file-size or row-count constraints. Keep an eye on the Notifications tab.

For example, if you have a list of customers and you want to add more without changing the existing customers' details, use the append operation. Or, if you have an outdated list of customers and you have a new list, you might choose to overwrite the old list with the new one.
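The customer-list example can be sketched with an in-memory SQLite table standing in for the target system. This is only an illustration of the append and overwrite semantics, not how Tellius or any particular connector implements them. The `write_back` function, the `customers` table, and the sample rows are all hypothetical.

```python
import sqlite3


def write_back(conn, table, rows, mode):
    """Write (id, name) rows to `table` in 'append' or 'overwrite' mode.

    Hypothetical helper; `table` is assumed to be a trusted identifier.
    """
    if mode not in ("append", "overwrite"):
        raise ValueError(f"unknown mode: {mode!r}")
    if mode == "overwrite":
        # Overwrite: discard whatever is already stored under this name.
        conn.execute(f"DROP TABLE IF EXISTS {table}")
    conn.execute(f"CREATE TABLE IF NOT EXISTS {table} (id INTEGER, name TEXT)")
    # Append (and the fresh table after an overwrite): add the new rows.
    conn.executemany(f"INSERT INTO {table} VALUES (?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")

# Initial customer list.
write_back(conn, "customers", [(1, "Ada"), (2, "Grace")], "overwrite")

# Append: existing customers stay untouched, one new customer is added.
write_back(conn, "customers", [(3, "Edsger")], "append")
after_append = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]

# Overwrite: the outdated list is replaced entirely by the new one.
write_back(conn, "customers", [(10, "Barbara")], "overwrite")
after_overwrite = conn.execute("SELECT id, name FROM customers").fetchall()
```

After the append, the table holds the two original customers plus the new one. After the final overwrite, only the rows from the new list remain, which is why it is worth checking for an existing table with the same name before choosing Overwrite.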
