Python Transform

Python Transform in Tellius lets you write, edit, validate, and apply Pandas or PySpark code that cleanses, enriches, and engineers your data, and save that code for reuse.

Compared with SQL, Python (whether PySpark or Pandas) is more flexible for applying complex business rules, iterative or row-level manipulations, and advanced text processing. You also get access to Python libraries for machine learning, data wrangling, and NLP; for instance, you might import sklearn for classification or re for regex-based text cleansing. Python is ideal for:

Advanced data science, feature engineering, custom ML transformations, or unusual data-cleaning logic.

Loops, complex conditionals, or string manipulations that are easier to write in Python than in SQL.

Very large datasets: with PySpark, transformations can run in a distributed environment.
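As an illustration of regex-based text cleansing with re, here is a minimal Pandas sketch. It assumes the Pandas framework is selected, so that the transform(dataframe) entry point used in the example later on this page receives a pandas DataFrame. The Phone column and the digits-only rule are hypothetical.

```python
import re

import pandas as pd

# Hypothetical cleansing rule: strip every character that is not a digit.
NON_DIGITS = re.compile(r"\D")


def transform(dataframe):
    # Work on a copy so the input DataFrame is left unchanged.
    result = dataframe.copy()
    # 'Phone' is a hypothetical text column such as "(555) 123-4567".
    # Non-string values (e.g. missing entries) are passed through as-is.
    result["Phone_Clean"] = result["Phone"].map(
        lambda value: NON_DIGITS.sub("", value) if isinstance(value, str) else value
    )
    return result
```

For a row whose Phone is "(555) 123-4567", the new Phone_Clean column holds "5551234567", while missing phone numbers stay missing.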

In Tellius, you can use the Python option to:

Cleanse your data of invalid, missing, or inaccurate values

Modify your dataset according to your business goals and analysis

Enhance your dataset as needed with data from other datasets
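To make the cleansing use case concrete, the following minimal Pandas sketch handles invalid and missing values, assuming the Pandas framework is selected and transform receives a pandas DataFrame. The Amount and Region columns and the rule that negative amounts are invalid are hypothetical business rules chosen for illustration.

```python
import pandas as pd


def transform(dataframe):
    result = dataframe.copy()
    # Coerce the hypothetical 'Amount' column to numbers; unparseable text becomes NaN.
    result["Amount"] = pd.to_numeric(result["Amount"], errors="coerce")
    # Example business rule: negative amounts are invalid, so mark them as missing.
    result.loc[result["Amount"] < 0, "Amount"] = float("nan")
    # Fill missing 'Region' labels with a placeholder value.
    result["Region"] = result["Region"].fillna("Unknown")
    # Drop rows whose Amount is still missing after cleansing.
    result = result.dropna(subset=["Amount"])
    return result.reset_index(drop=True)
```

Coercing first and then dropping keeps each rule in its own step, which makes it easy to change one rule (for example, filling missing amounts with 0 instead of dropping them) without touching the others.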

Pick Python if:

You need advanced logic that’s awkward in SQL, such as heavy string manipulation, complex conditionals, or specialized data-science libraries.

You’re comfortable coding in Python and want direct access to packages (e.g., Pandas, PySpark, NumPy).

You have iterative or row-by-row transformations that don’t translate neatly into SQL statements.
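A multi-branch business rule that would need a long CASE expression in SQL can be written compactly with NumPy. The sketch below assumes the Pandas framework is selected; the Amount and Customer_Type columns, the thresholds, and the tier names are hypothetical. It uses numpy.select, which evaluates the conditions in order and takes the first match; logic that genuinely cannot be vectorized could instead use DataFrame.apply with axis=1, at the cost of speed.

```python
import numpy as np
import pandas as pd


def transform(dataframe):
    result = dataframe.copy()
    # Conditions are checked in order; the first one that is True wins.
    conditions = [
        (result["Amount"] >= 1000) & (result["Customer_Type"] == "Enterprise"),
        result["Amount"] >= 1000,
        result["Amount"] >= 100,
    ]
    choices = ["Strategic", "Large", "Medium"]
    # Rows that match no condition fall back to the default tier.
    result["Deal_Tier"] = np.select(conditions, choices, default="Small")
    return result
```

For amounts of 5000 (Enterprise), 2000 (SMB), 150, and 20, the rule assigns Strategic, Large, Medium, and Small respectively.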

The following example keeps only the rows where Payment_Type is Visa:

```python
def transform(dataframe):
        # use 8 spaces for indentation
        # PySpark: DataFrame.where() keeps only the rows that match the condition
        resultDataframe = dataframe.where(dataframe['Payment_Type'] == 'Visa')
        return resultDataframe
```

Creating and applying Python code

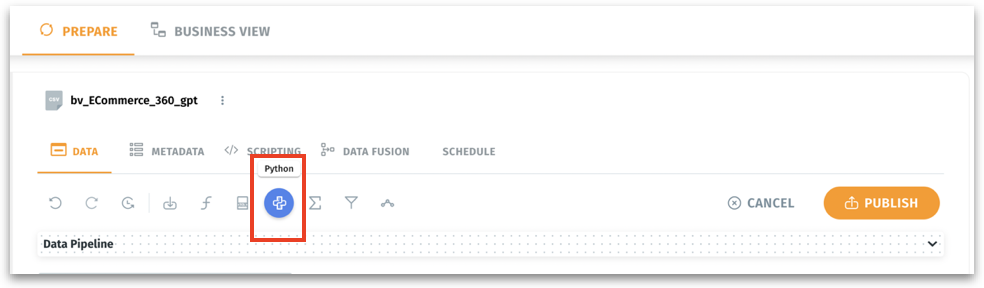

Navigate to Data → Prepare → Data.

Select the required dataset and click Edit.

Above the Data Pipeline, click the Python option.

To view the list of columns available in the selected dataset, click the Column List tab.

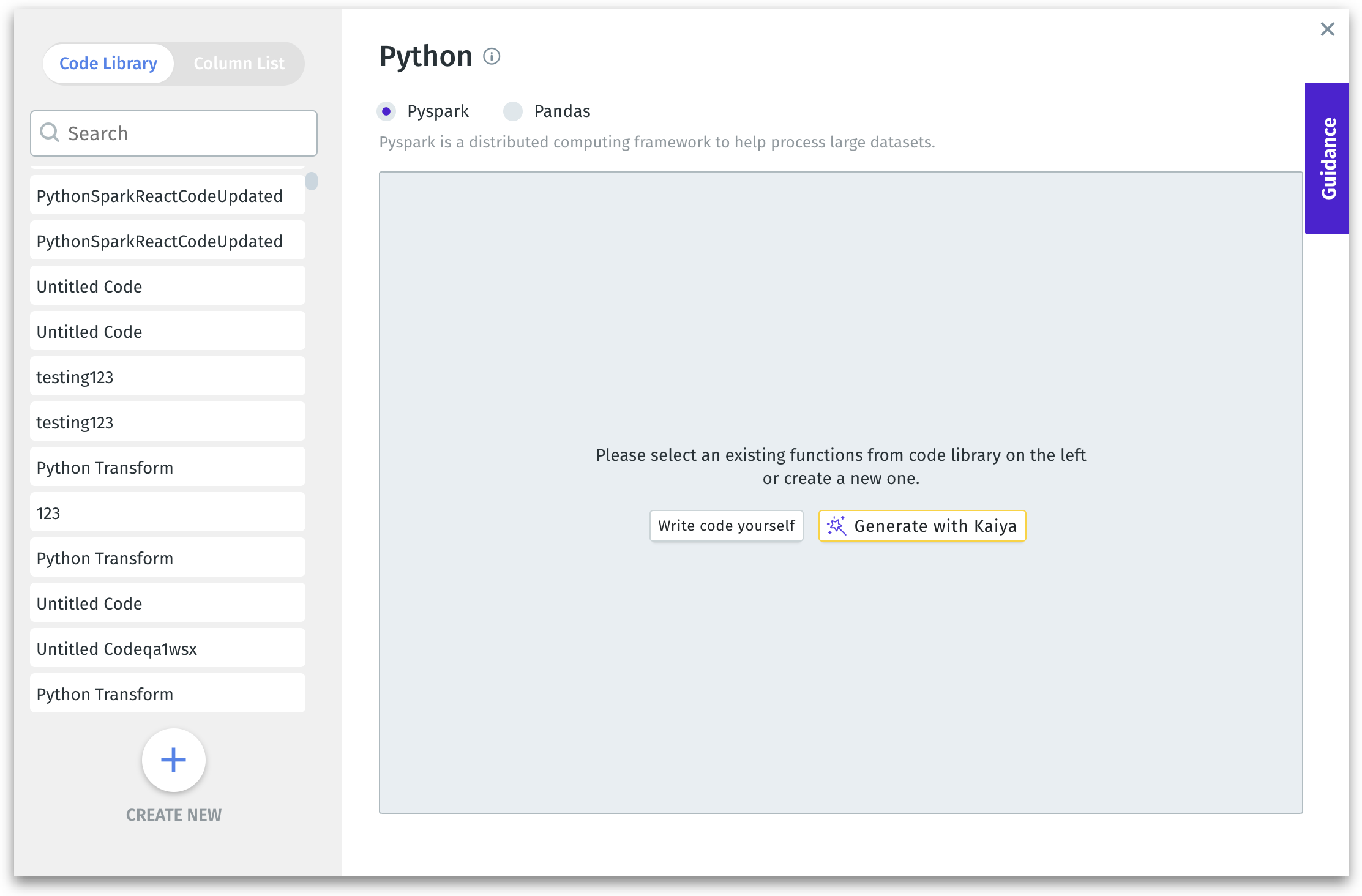

Select the required Python framework: PySpark or Pandas.

Choose PySpark:

When working with datasets too large to fit into memory on a single machine.

When your data processing needs to be parallelized across multiple nodes for performance.

For cluster-based workloads on data stored in distributed environments (e.g., Hadoop, AWS S3, or large data warehouses).

Ideal for operations on terabytes or petabytes of data.

Choose Pandas:

For small to medium data, when your dataset fits into memory on a single machine.

For quick, iterative data exploration and manipulation.

Simpler syntax and user-friendly APIs for data cleaning, transformation, and visualization.

Ideal for non-distributed workloads where performance isn’t a concern.
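If you choose Pandas, the Visa filter from the starting example needs a different idiom. In pandas, DataFrame.where keeps every row and replaces values that fail the condition with NaN, so it masks rather than filters. A minimal Pandas sketch of the same filter uses boolean indexing instead, assuming transform receives a pandas DataFrame when Pandas is selected. The `__main__` block is only a local usage example with made-up sample data.

```python
import pandas as pd


def transform(dataframe):
    # Boolean indexing keeps only the rows where the mask is True.
    # (dataframe.where(mask) would keep all rows and fill the rest with NaN.)
    resultDataframe = dataframe[dataframe["Payment_Type"] == "Visa"]
    return resultDataframe


if __name__ == "__main__":
    # Hypothetical sample data for a quick local check.
    sample = pd.DataFrame({"Payment_Type": ["Visa", "Amex", "Visa"], "Amount": [10, 20, 30]})
    print(transform(sample))
```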

To create new code, click the Create New or Write code yourself button.

Alternatively, click the Generate with Kaiya button to have Tellius Kaiya generate the required code for you.

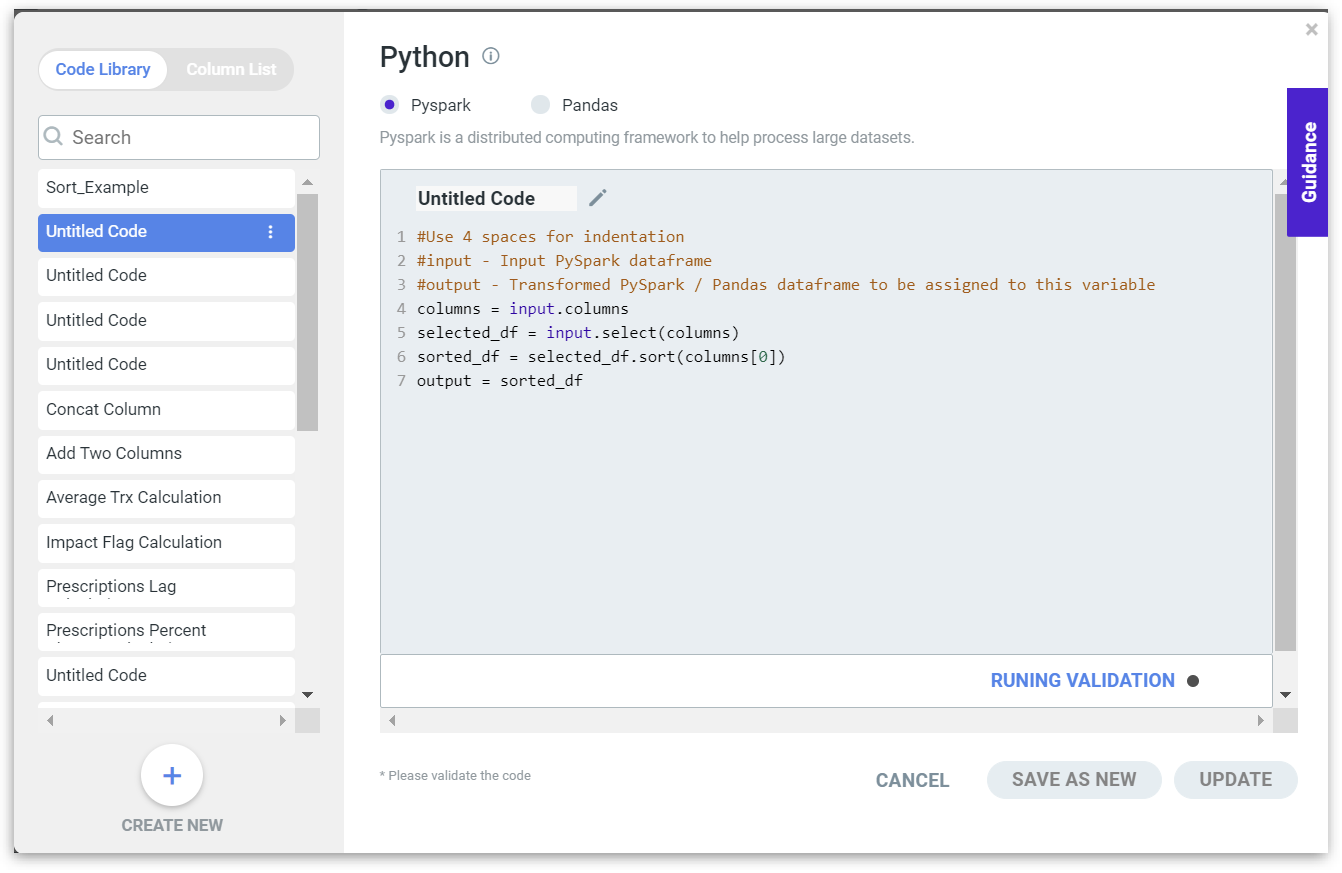

Once the code is ready, click Run Validation to validate it. While validation is in progress, the Running Validation message is displayed.

Tellius validates the entered code; any errors it finds are displayed in the bottom section of the window.

If the code is correct, a Successfully Validated message is shown at the top.

After resolving any errors, click Apply to apply the code to the dataset, or click Save in Library to save it to the code library in the left pane. To discard the code window, click Cancel.

From v4.2, users can apply the code to the dataset without saving it to the code library first.

Editing Python code

In the Python code window, search for and select the required code in the existing Code Library.

Click on Edit to modify and validate the code.

Click Run Validation to validate the code. While validation is in progress, the Running Validation message is displayed.

Tellius validates the entered code; any errors it finds are displayed in the bottom section of the window.

If the code is correct, a Successfully Validated message is shown at the top.

Click Apply to apply the Python code to the dataset.

Click Update to update the existing code, or click Save as New to save it as new code.

The following libraries have been removed and cannot be imported into Python during data preparation. Importing any of them results in a Validation failed error:

- shlex
- sh
- plumbum
- pexpect
- fabric
- envoy
- commands
- os
- subprocess
- requests
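To catch these imports before clicking Run Validation, you could scan your code locally. The helper below is a hypothetical sketch, not the check Tellius itself performs: it uses Python's ast module to find import statements that reference the modules listed above. It only sees static import and from ... import statements, so dynamic imports (for example via importlib) are not detected.

```python
import ast

# Module names listed as removed for Python data preparation.
BLOCKED_MODULES = {
    "shlex", "sh", "plumbum", "pexpect", "fabric",
    "envoy", "commands", "os", "subprocess", "requests",
}


def find_blocked_imports(source):
    """Return the sorted blocked top-level modules imported by `source`."""
    found = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # Absolute "from X import Y" statements; relative imports are skipped.
            names = [node.module]
        else:
            continue
        for name in names:
            # "os.path" is blocked because its top-level package "os" is.
            top_level = name.split(".")[0]
            if top_level in BLOCKED_MODULES:
                found.add(top_level)
    return sorted(found)
```

For example, code containing `import os` and `from subprocess import run` would be reported as `["os", "subprocess"]`, while code that only imports re and pandas returns an empty list.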
